AI vs. machine learning vs. deep learning: Key differences

Artificial intelligence, machine learning and deep learning are popular terms in enterprise IT and are sometimes used interchangeably, particularly when companies are marketing their products. The terms, however, are not synonymous -- there are important distinctions.

AI refers to the simulation of human intelligence by machines. Its definition keeps changing: as new technologies are created to simulate humans better, the capabilities and limitations of AI are revisited.

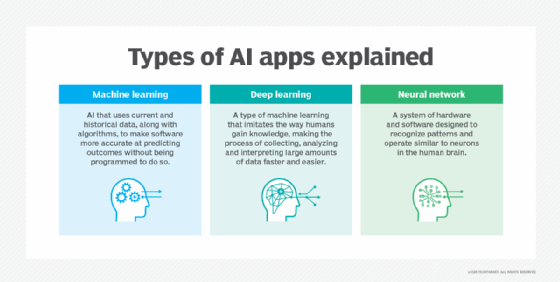

Those technologies include machine learning; deep learning, a subset of machine learning; and neural networks, the layered models on which deep learning is built.

To better understand the relationship between the different technologies, here is a primer on artificial intelligence vs. machine learning vs. deep learning.

What is artificial intelligence?

The term AI has been around since the 1950s. In short, it describes our effort to build machines that can challenge what made humans the dominant lifeform on the planet: our intelligence. However, defining intelligence has turned out to be rather tricky, because what we perceive as intelligent changes over time.

Early AIs were rule-based computer programs that could solve somewhat complex problems. Instead of hardcoding every decision the software was supposed to make, developers divided the program into a knowledge base and an inference engine. They would populate the knowledge base with facts and rules, and the inference engine would then query them to arrive at results.
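To make the knowledge base/inference engine split concrete, here is a minimal sketch in Python. It assumes the simplest possible design, facts as strings and rules as "if all these facts hold, conclude that one", with a forward-chaining engine that keeps firing rules until nothing new can be derived. The function `forward_chain` and the bird-themed rules are hypothetical illustrations, not taken from any particular historical system.

```python
# A toy rule-based system: a hand-written knowledge base of facts and
# if-then rules, plus a forward-chaining inference engine.

# Each rule pairs a set of required facts with the fact it concludes.
Rule = tuple[frozenset[str], str]


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Repeatedly fire every rule whose conditions are all known facts."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires only if all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical knowledge base: every rule is written by a developer.
rules: list[Rule] = [
    (frozenset({"has_feathers"}), "is_bird"),
    (frozenset({"is_bird", "can_fly"}), "can_migrate"),
]

derived = forward_chain({"has_feathers", "can_fly"}, rules)
print(sorted(derived))  # ['can_fly', 'can_migrate', 'has_feathers', 'is_bird']
```

Nothing here is learned: if a situation is not covered by a rule a human wrote, the engine simply cannot conclude anything about it, which is the limitation the next paragraph describes.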

But this type of AI was limited, particularly as it leaned heavily on human input. Rule-based systems lack the flexibility to learn and evolve and are hardly considered intelligent anymore.

Modern AI algorithms can learn from historical data, which makes them usable for an array of applications such as robotics, self-driving cars, power grid optimization and natural language understanding.

While AI sometimes yields superhuman performance in these fields, we still have a long way to go before AI can actually compete with human intelligence.

For now, there is no AI that can learn the way humans do -- that is, from just a few examples. AI needs to be trained on mountains of data to understand any topic. We still don't have algorithms capable of transferring their understanding of one domain to another. For instance, if we learn a game such as StarCraft, we can pick up StarCraft II quickly. But for AI, it's a whole new world, and it must learn each game from scratch.

Human intelligence also possesses the ability to link meanings. For instance, consider the word human. We can identify humans in pictures and videos, and AI has also gained that capability. But we also know what we should anticipate from humans: We never expect a human to have four wheels and emit carbon like a car. Yet, it's likely no AI can even tell what was wrong with the sentence I just wrote.

So, AI's definition is a moving target. We were amazed when AI algorithms became sophisticated enough to outperform expert human radiologists on some diagnostic tasks, but later learned about their limitations. That's why we now distinguish between the current narrow AI and the human-level version of AI that we are pursuing: artificial general intelligence (AGI). Every AI application that exists today falls under narrow AI, also called weak AI, while AGI is currently only theoretical.

What is machine learning?

Machine learning is a subset of AI; it's one of the approaches we've developed to mimic human intelligence. The other main approach is symbolic AI, or good old-fashioned AI (GOFAI): rule-based systems built on if-then conditions.

Machine learning marks a turning point in AI development. Before machine learning, we tried to teach computers all the ins and outs of every decision they had to make. This made the process fully transparent, and the algorithm could handle many complex scenarios.

In its most complex form, the AI would search through a tree of possible decisions and pick the branch with the best outcome. That is how IBM's Deep Blue was designed to beat Garry Kasparov at chess.
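The "search the decision branches" idea can be sketched with minimax search and alpha-beta pruning, the classic game-tree search technique used by chess engines of Deep Blue's era. This is a toy under stated assumptions: the game tree is a hypothetical nested list whose leaves are scores from our point of view, and `alphabeta` and `best_move` are illustrative names. Deep Blue's real search was vastly larger, ran on custom hardware and relied on a hand-tuned evaluation function.

```python
import math
from typing import Union

# A position is either a leaf score (int) or a list of child positions.
Tree = Union[int, list["Tree"]]


def alphabeta(node: Tree, maximizing: bool,
              alpha: float = -math.inf, beta: float = math.inf) -> float:
    """Minimax value of `node`, skipping branches that cannot change it."""
    if isinstance(node, int):
        return node  # leaf: a fixed, hand-defined score
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, best)
            if alpha >= beta:  # the opponent would never allow this line
                break
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta))
        beta = min(beta, best)
        if alpha >= beta:  # we already have a better option elsewhere
            break
    return best


def best_move(root: list[Tree]) -> int:
    """Index of the root move whose subtree has the highest minimax value."""
    scores = [alphabeta(child, maximizing=False) for child in root]
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical two-ply tree: we choose a move, then the opponent replies.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
print(alphabeta(tree, maximizing=True))  # 3
print(best_move(tree))                   # 0
```

Every rule of play and every leaf score is defined by people; the program only explores the consequences, which is exactly the kind of system machine learning moved away from.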

But there are many things we simply cannot define via rule-based algorithms: face recognition, for instance. A rule-based system would need to detect shapes such as circles, then determine how they are positioned relative to one another and inside which other shapes before concluding that they constitute an eye. And don't ask programmers how to code for detecting a nose!

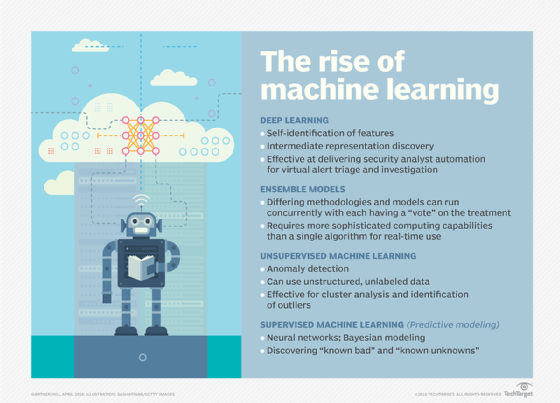

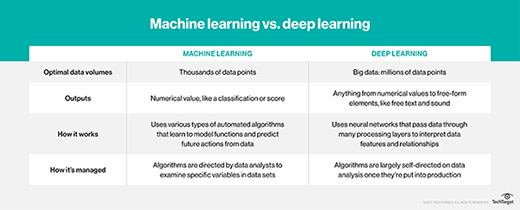

Machine learning takes an entirely different approach: it lets machines learn by themselves by ingesting vast amounts of data and detecting patterns. Many machine learning algorithms rely on statistical methods and big data, and it is arguable that our advances in big data, and the vast amounts of data we have collected, enabled machine learning in the first place.
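To contrast this with hand-written rules, here is a minimal sketch of one of the simplest statistical learners, ordinary least squares linear regression, in plain Python. The function name `fit_line` and the data points are hypothetical; the point is that the relationship between x and y is estimated from examples rather than coded into the program.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x), both up to the same 1/n factor.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    # The fitted line always passes through the point of means.
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training data: the rule y = 2x + 1 is never written down;
# the parameters are recovered from the examples.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)          # 2.0 1.0
print(slope * 10.0 + intercept)  # 21.0, a prediction for an unseen input
```

Real data is noisy, so the fitted line is the one that minimizes the squared error rather than one that passes through every point; the same code handles that case unchanged.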

Widely used machine learning algorithms include linear regression, logistic regression, decision trees, support vector machines, naive Bayes, k-nearest neighbors and random forest for classification and regression, as well as k-means for clustering and dimensionality reduction algorithms such as principal component analysis.
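As an illustration of one algorithm from that list, here is a minimal k-nearest neighbors classifier in plain Python. It assumes 2D points, Euclidean distance and a simple majority vote; `knn_predict` and the labeled points are hypothetical. Library implementations add efficient neighbor search and tie-breaking options that this sketch omits.

```python
import math
from collections import Counter

Point = tuple[float, float]


def knn_predict(train: list[tuple[Point, str]], query: Point, k: int = 3) -> str:
    """Label `query` by majority vote among its k nearest training examples."""
    # Sort all training examples by Euclidean distance to the query point.
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


# Hypothetical labeled data: two well-separated clusters.
train = [
    ((1.0, 1.0), "A"), ((1.5, 2.0), "A"), ((2.0, 1.0), "A"),
    ((6.0, 5.0), "B"), ((7.0, 7.0), "B"), ((6.5, 6.0), "B"),
]
print(knn_predict(train, (1.2, 1.4)))  # A
print(knn_predict(train, (6.0, 6.0)))  # B
```

There is no explicit "training" step here: the labeled examples themselves are the model, and the pattern is detected at prediction time by looking at which examples are closest.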

What is deep learning?

Deep learning is a subset of machine learning. It still involves letting the machine learn from data, but it marks an important milestone in AI's evolution.

Deep learning was developed based on our understanding of neural networks. The idea of building AI based on neural nets has been around for decades, but it wasn't until 2012 that deep learning gained real traction. Just as machine learning owes its rise to the vast amounts of data we produce, deep learning owes its adoption to much cheaper computing power (as well as advances in its algorithms).

Deep learning enabled much better results than were originally possible with machine learning. Consider the face recognition example from earlier: To detect a face, what kind of data should we give the AI, and how should it learn what to look for, given that the only information we can provide is pixel colors?

Deep learning uses layers of information processing, each learning progressively more complex representations of the data. The early layers may learn about colors and edges, the next ones about shapes, the following ones about combinations of those shapes and, finally, about actual objects. Deep learning demonstrated a breakthrough in object recognition, and its rise quickly advanced AI on several fronts, including natural language understanding.
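The idea of stacked layers building on each other's output can be shown with a tiny sketch, under heavy simplifying assumptions: a two-layer network of threshold neurons that computes XOR, a function no single threshold neuron can represent. The hidden layer produces intermediate features (roughly OR and AND) and the output layer combines them. The weights are set by hand for illustration, and the names `layer` and `xor_net` are hypothetical; real deep learning networks use smooth activations, many more layers and neurons, and weights learned from data, typically with backpropagation and gradient descent.

```python
def step(z: float) -> int:
    """Threshold activation: the neuron fires (1) if its input is positive."""
    return 1 if z > 0 else 0


def layer(inputs: list[int], weights: list[list[float]],
          biases: list[float]) -> list[int]:
    """A fully connected layer: weighted sum plus bias, per neuron."""
    return [step(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def xor_net(a: int, b: int) -> int:
    # Hidden layer: neuron 1 acts as OR, neuron 2 acts as AND.
    hidden = layer([a, b], weights=[[1, 1], [1, 1]], biases=[-0.5, -1.5])
    # Output layer combines the features: OR and not AND, which is XOR.
    (out,) = layer(hidden, weights=[[1, -1]], biases=[-0.5])
    return out


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))
```

The output layer never sees the raw inputs directly; it only works with the features the previous layer computed, which is the same principle that lets deeper networks go from pixels to edges, shapes and objects.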

Deep learning is currently the most sophisticated AI architecture we have developed. Common deep learning architectures include convolutional neural networks, recurrent neural networks, long short-term memory networks, generative adversarial networks and deep belief networks.

The difference between AI vs. machine learning vs. deep learning

AI vs. machine learning and deep learning

Unlike AI, machine learning and deep learning have very clear definitions. What we consider AI changes over time. For instance, optical character recognition (OCR) used to be considered AI, but it no longer is. However, a deep learning algorithm trained on thousands of handwriting samples that learns to convert them to text would be considered AI by today's definition.

Machine learning and deep learning power various applications, including natural language processing applications, image recognition programs and classification platforms. The technologies enable enterprises to augment their workforce by letting intelligent machines tackle mundane, repetitive tasks, freeing up employees to focus on more creative or higher-level work.

Machine learning vs. deep learning

Deep learning is a type of machine learning that uses complex, multilayered neural networks to mimic aspects of human intelligence. Because of this complexity, deep learning typically requires more advanced hardware than other machine learning methods. High-end GPUs help here, as does access to large amounts of energy.

Deep learning models can learn relevant features directly from raw data, more autonomously than traditional machine learning models, which typically rely on features engineered by hand, and they can make better use of large data sets. Applications that use deep learning include facial recognition systems, self-driving cars and deepfake content.

Machine learning and deep learning both represent great milestones in AI's evolution, and there will probably be many others as we head toward AGI.

© 2021 LeackStat.com